Virtual companions, real responsibility

Safe use of large language models in medicine


In the journal “The Lancet Digital Health”, researchers led by Professors Stephen Gilbert and Jakob N. Kather from the EKFZ for Digital Health provide an overview of current health applications based on large language models (LLMs), their strengths and weaknesses, and the regulatory challenges they raise. They call for a framework that recognizes the capabilities and limitations of these AI applications and emphasize the urgent need to enforce existing regulations. If the authorities continue their hesitant approach, they endanger not only users but also the potential of future LLM applications in medicine.

Oscar Freyer, Isabella Catharina Wiest, Jakob Nikolas Kather, Stephen Gilbert: A future role for health applications of large language models depends on regulators enforcing safety standards; The Lancet Digital Health, 2024.

Opportunities and risks of LLM-based healthcare applications

Applications based on artificial intelligence (AI) are already indispensable in medicine. LLMs such as GPT-4 offer a wide range of possibilities for supporting the diagnosis, treatment and care of patients. At the same time, there are concerns regarding their safety and regulation. Results are often variable, lack explainability and carry the risk of hallucinations. The approval of LLM-based applications for medical purposes as medical devices under US and EU law poses challenges for regulatory authorities. Despite the risks for users, such as misdiagnosis or unverified medical advice, various applications are already available on the market.

Since LLMs first appeared, developers such as Google, Meta, OpenAI and Microsoft have steadily released new and improved models, and their performance in medical applications has improved as well. “LLMs have the potential to transform healthcare and will become increasingly important in the areas of cancer diagnosis and treatment, as well as screening, remote care, documentation and clinical decision support. These models offer great potential, but also harbor risks,” says Prof. Dr. med. Jakob N. Kather, Professor of Clinical Artificial Intelligence at the EKFZ for Digital Health at TU Dresden and oncologist at the University Hospital Carl Gustav Carus in Dresden. Intensive research is still under way to determine whether the advantages outweigh the disadvantages in medical applications. Alongside the many opportunities, the researchers clearly point out open legal and ethical questions in their publication, particularly regarding data privacy, the protection of intellectual property, and gender and racial bias.

Approval as medical devices required

As soon as applications offer laypersons specific medical advice on diagnosis or treatment, they are medical devices under both EU and US law and as such require regulatory approval. While compliance with these regulations was relatively clear for earlier, narrowly defined applications, the versatility of LLMs poses new regulatory challenges for the authorities. Although some legal ambiguities remain, there are also clear cases of unapproved, unregulated products on the market. The researchers make it clear that the authorities are responsible for enforcing the applicable rules. At the same time, the authorities should ensure that appropriate frameworks for testing and regulating LLM-based health applications are developed. “These technologies are already with us, and we need to have two things together to ensure their safe development. The first are appropriate methods to evaluate these new technologies. The second is the appropriate enforcement of current legal requirements against clear cases where unsafe LLM apps are on the market. This is essential if we want to use these medical AI applications safely in the future,” says Prof. Dr. Stephen Gilbert, Professor of Medical Device Regulatory Science at the EKFZ for Digital Health at TU Dresden.

More News

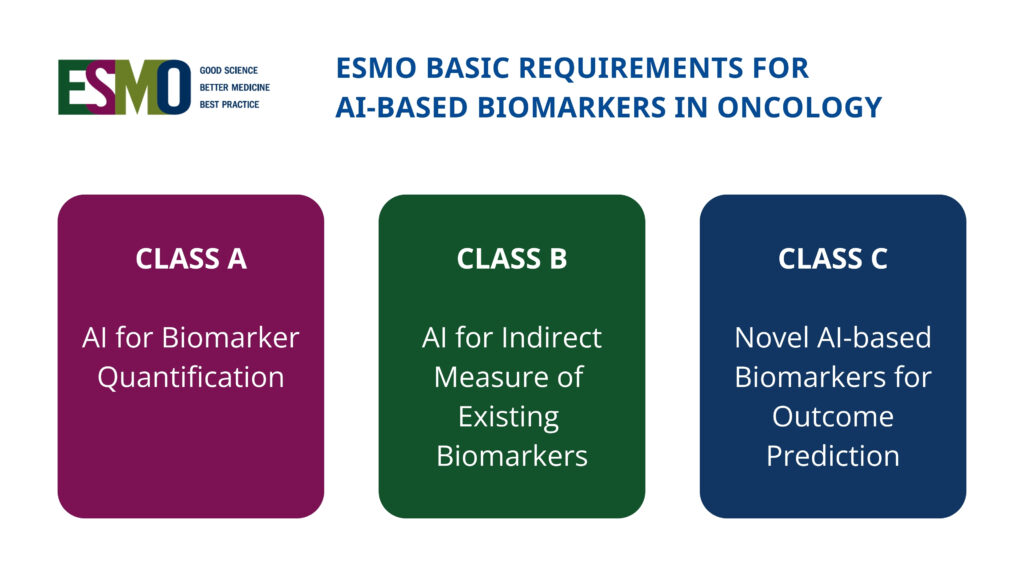

New international framework defines standards for AI-based biomarkers in oncology (EBAI)

New ESMO Guidelines: Safe use of large language models in oncology