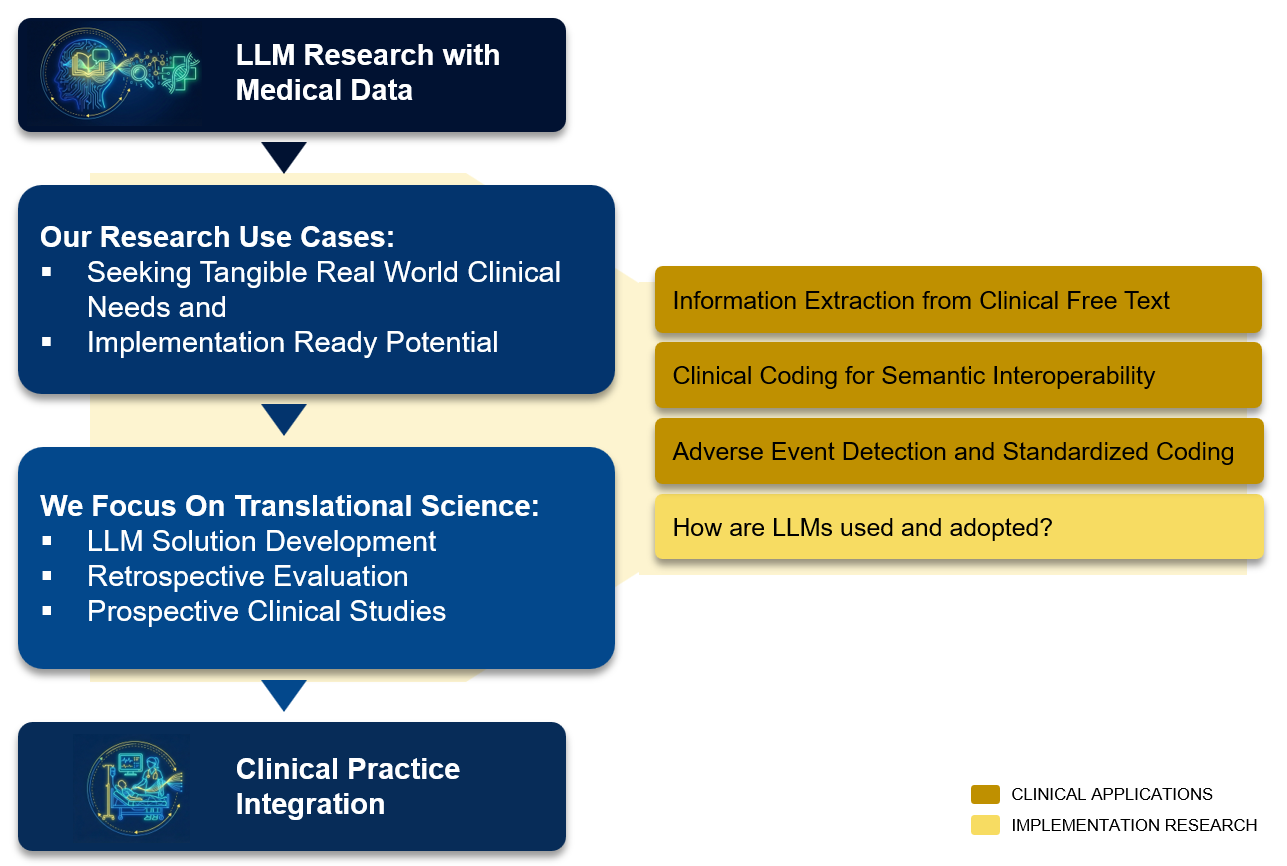


Our research group develops and evaluates privacy-preserving large language model applications for clinical practice. We design locally deployable systems for medical documentation, information extraction, and clinical coding across multiple specialties, including oncology and gastroenterology. Through rigorous retrospective and prospective clinical studies, we assess documentation quality, workflow integration, and real-world feasibility. Our work generates evidence on how LLM-based tools can be safely integrated into clinical workflows and improve patient care.

AI innovation and clinical implementation

Our research focuses on bridging the gap between artificial intelligence innovation and clinical implementation. We develop and evaluate privacy-preserving large language model (LLM) applications that can be deployed locally within hospital infrastructure, keeping patient data secure while enabling advanced natural language processing. Our previous work has focused on systems that convert unstructured medical text into structured data for clinical documentation, information extraction, and standardized coding across diverse medical specialties. What distinguishes our approach is its emphasis on clinical evaluation: we combine retrospective analyses with prospective studies embedded in real-world clinical workflows to assess not only technical performance but also practical feasibility, clinician adoption, and impact on the quality of patient care.

Detecting Patient Safety Risks Automatically While Reducing Clinical Paperwork

A key example of our work is the automated detection and standardized coding of adverse events from free-text clinical documentation. In this project, LLMs identify safety-relevant events in routine clinical texts such as procedure reports and clinical notes and map them to MedDRA (Medical Dictionary for Regulatory Activities) terminology for pharmacovigilance. The system undergoes both retrospective validation and prospective evaluation within active clinical workflows, measuring coding accuracy, completeness of adverse event capture, and usability in daily practice. Through partnerships with university hospitals and research networks, we conduct clinical studies that generate the evidence needed to integrate AI tools responsibly into healthcare delivery. Our ultimate aim is to improve patient safety monitoring while reducing the administrative burden on clinical staff.

Recent publications

A software pipeline for medical information extraction with large language models (LLM-AIX)

Introduces an open-source, modular LLM pipeline for structured information extraction from free-text medical records, validated across oncology and other clinical domains and designed for downstream ML applications.

Deidentifying medical documents with local, privacy-preserving large language models (LLM-anonymizer)

Presents a locally deployable LLM-based system for medical text de-identification that preserves privacy, avoids cloud dependency, and achieves high-quality anonymization suitable for real-world clinical data sharing.

Deep Sight: enhancing periprocedural adverse event recording in endoscopy

Demonstrates how privacy-preserving LLMs can structure endoscopy reports to systematically capture periprocedural adverse events, improving data completeness and safety monitoring without increasing documentation burden.

Dr. med. Isabella Wiest, M

EKFZ for Digital Health, Medical Faculty Carl Gustav Carus, TUD Dresden University of Technology